Data extraction and synthesis

Title screening removed papers without a direct connection to the SDGs, such as theoretical topics in immunology and vaccinology, vaccine efficacy and clinical trials, technical papers on human or veterinary vaccine development, and papers related to cybersecurity.

Abstract screening mainly removed papers on livestock immunization or detailed human immunology. Similarly, papers that only briefly listed the sustainability aspect in the limitations section of their research were excluded at this point. In terms of eligibility, papers dealing with models and methods that qualify as applicable and relevant for decision-makers, implementers, and other stakeholders were included. The insights from all the resulting papers were extracted in Excel for qualitative synthesis. The inclusion criteria were based on Kovacs and Moshtari [9] and Besiou, Stapleton, and Van Wassenhove [10], as shown in Table 2.

https://pubmed.ncbi.nlm.nih.gov/34446050/

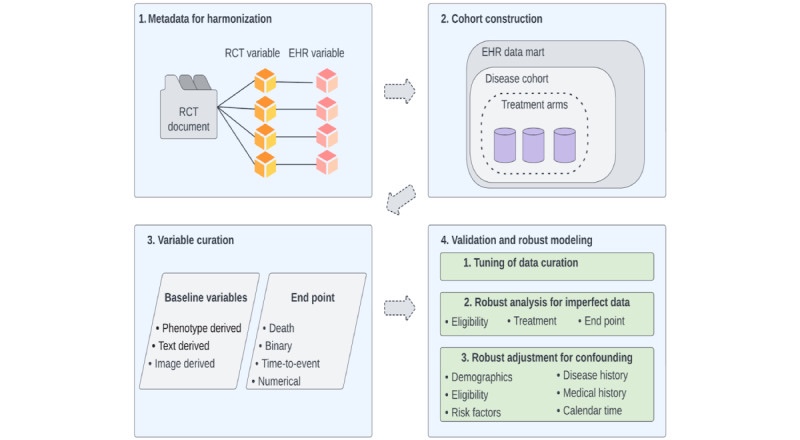

Generate Analysis-Ready Data for Real-world Evidence: Tutorial for Harnessing Electronic Health Records With Advanced Informatic Technologies https://pubmed.ncbi.nlm.nih.gov/37227772/

Real-World Evidence: What It Is and What It Can Tell Us According to the International Society for Pharmacoepidemiology (ISPE) Comparative Effectiveness Research (CER) Special Interest Group (SIG) https://ascpt.onlinelibrary.wiley.com/doi/10.1002/cpt.1086

CONFLICT OF INTEREST

Dr. Yuan and Dr. M. Sanni Ali have nothing to disclose. Dr. Brouwer reports employment at Shire Pharmaceuticals, formerly employed at Boehringer Ingelheim Pharmaceuticals. Submitted work completed as a part of extracurricular involvement as chair of ISPE Comparative Effectiveness Research Special Interest Group. Dr. Girman reports grants from pharmaceutical companies including CSL Behring, Roivant, Adgero, Regeneron, Prolong, Ritter, CytoSorbents, Boehringer-Ingelheim, outside the submitted work; and serves on the Methodology Committee of the Patient-Centered Outcomes Research Institute, and ex-officio on their Clinical Trials Advisory Panel. Dr. Guo did not receive any financial support or material gain that may involve the subject matter of the article. Dr. Guo was a principal or co-investigator on research studies sponsored by Novartis, AstraZeneca, Bristol-Myers Squibb, Janssen Ortho-McNeil, Roche-Genentech, and Eli Lilly. None of them were involved in this article development. Dr. Lund reports that her spouse is a full-time, paid employee of GlaxoSmithKline. Elisabetta Patorno was supported by a career development grant K08AG055670 from the National Institute on Aging and reports research funding from GSK and Boehringer-Ingelheim, outside the submitted work. Dr. Slaughter and Dr. Wen have nothing to disclose. Dr. Bennett is an employee of Takeda Pharmaceuticals International Co.

- Benzodiazepine use in medical cannabis authorization adult patients from 2013 to 2021: Alberta, Canada. Dubois C, et al. BMC Public Health. 2024. PMID: 38504198 Free PMC article.

- Active Vaccine Safety Surveillance: Global Trends and Challenges in China. Liu Z, et al. Health Data Sci. 2021. PMID: 38487501 Free PMC article. Review.

High-dimensional Propensity Score Adjustment in Studies of Treatment Effects Using Health Care Claims Data

Schneeweiss, Sebastian; Rassen, Jeremy A.; Glynn, Robert J.; Avorn, Jerry; Mogun, Helen; Brookhart, M Alan

Ramirez-Rubio O, et al. Global Health. 2019. PMID: 31856877 Free PMC article.

Global health research partnerships in the context of the Sustainable Development Goals (SDGs). Addo-Atuah J, et al. Res Social Adm Pharm. 2020. PMID: 32893133 Free PMC article.

Multisectoral action towards sustainable development goal 3.d and building health systems resilience during and beyond COVID-19: Findings from an INTOSAI development initiative and World Health Organization collaboration. Hellevik S, et al. Front Public Health. 2023. PMID: 37275502 Free PMC article.

• Addressing Policy Coherence Between Health in All Policies Approach and the Sustainable Development Goals Implementation: Insights From Kenya. Mauti J, et al. Int J Health Policy Manag. 2022. PMID: 33233034 Free PMC article. Review.

IMMUNIZATION AGENDA 2030

A global strategy to leave no one behind

Advancing sustainable development goals through immunization: a literature review – PubMed

https://pubmed.ncbi.nlm.nih.gov/38623549/

Epidemiology 20(4):p 512-522, July 2009. | DOI: 10.1097/EDE.0b013e3181a663cc

Output of a screening tool for close correlates of treatment choice that are not related to the study outcome (coxib example). Electronic Appendix 1: https://cdn-links.lww.com/permalink/ede_20_4_schneeweiss_200614_sdc1.pdf

Live biotherapeutic products and their regulatory framework in Italy and Europe PMID: 36974706

• DOI: 10.4415/ANN_23_01_09

Clinical Pharmacology and Therapeutics

Clin Pharmacol Ther. 2020 Apr; 107(4): 843–852.

Published online 2019 Nov 14. https://doi.org/10.1002/cpt.1658

PMCID: PMC7093234

NIHMSID: NIHMS1052743

PMID: 31562770

Examining the Use of Real‐World Evidence in the Regulatory Process

Brett K. Beaulieu‐Jones, 1 Samuel G. Finlayson, 1 William Yuan, 1 Russ B. Altman, 2 Isaac S. Kohane, 1 Vinay Prasad, 3 and Kun‐Hsing Yu 1


Real‐world data (RWD) and real‐world evidence (RWE) have received substantial attention from medical researchers and regulators in recent years.1, 2 The US Food and Drug Administration (FDA) defines data relating to patient health status and the delivery of healthcare (such as electronic health records (EHRs), claims and billing activities, product and disease registries, and patient‐generated data) as real‐world data (RWD), and the analysis of these data regarding usage and effectiveness is termed real‐world evidence (RWE).3 The European Medicines Agency (EMA) similarly defines RWD as “routinely collected data relating to a patient’s health status or the delivery of health care from a variety of sources other than traditional clinical trials” and has expressed interest in using RWD for regulatory decision making.4 RWE presents great potential to accelerate therapy development and to monitor the successes and failures of both newly approved and existing therapies.5 It is critical that stakeholders, including researchers (both academic and industry), providers, regulators, administrators, and patients, understand the limitations of RWE. RWD are not generated with a particular study question in mind but primarily for clinical care and billing purposes. As such, appropriate use of RWE must be driven by well‐designed guidelines and regulations to ensure accurate, unbiased findings. If used correctly, RWE could supplement traditional clinical research to aid therapeutic development, clinical decision making, efficiency gains in healthcare, and improved access to therapeutics for underserved populations. Examples of promise in each of these areas can be seen in the results of the EMA’s adaptive pathways pilot.6 If used incorrectly, RWE could lead to spurious approvals, financial waste, and, most importantly, harm to patients.

To date, RWE has been used primarily to perform postmarketing surveillance to monitor drug safety and detect adverse events. RWE has also been particularly effective when the outcome of interest is rare, in cases where a very long follow‐up period is required to assess the health outcomes, or when it is difficult to perform randomized controlled trials (RCTs), such as in pediatric or pregnant populations. An early example was the discovery, using observational data, of a link between the ingestion of diethylstilbestrol during pregnancy and vaginal adenocarcinoma in the offspring.7 More recent studies linked the use of angiotensin‐converting enzyme inhibitors while pregnant to congenital malformations8 and exposure to selective serotonin reuptake inhibitors to persistent pulmonary hypertension in newborns.9, 10 Most recently, postmarketing evidence has shown that RWE may have an important role to play in understanding drug effectiveness and adverse events based on differences in metabolism among racial and genetic groups.11, 12, 13, 14

There is a growing interest in the usage of RWE by regulatory agencies to evaluate the safety and efficacy of medical treatments.2, 5, 15, 16, 17 In particular, Congress has mandated that the FDA increase focus on RWE for regulatory decision making both for new approvals and for evaluating additional indications for approved therapies,18 and the FDA has testified on progress toward implementing this focus.19 The EMA recently accepted an RWE‐based control arm during its analysis of the effectiveness of Alecensa compared with the standard of care.20, 21, 22 In addition, the FDA has established partnerships with private companies whose goal is to use RWD in regulatory decision making, including using synthetic control arms.20

Although some have been enthusiastic about the ability of observational RWD to substitute for RCTs, others have expressed caution. Booth et al.23 contend that RWD should not be used as a replacement for clinical trials due to the inability to compare outcomes of nonrandomized groups. A recent comprehensive empirical analysis of treatments in oncology confirms this finding. The results on replicating clinical trials in observational data are highly mixed. Concato et al.24 concluded that well‐designed observational studies closely estimated the effects of treatment when compared with RCTs on the same subject. On the other hand, Soni et al.25 found a poor correlation between the hazard ratios seen in observational studies vs. randomized trials on the same topic. There is limited evidence that some RCTs may be difficult to replicate.26, 27, 28 This could be due to population and effect sizes, population demographics, or other factors.

RWE may provide valuable insight into the effectiveness and generalizability of interventions in practice, even as RCTs are unlikely to be supplanted as the gold standard for measuring intervention efficacy. It is important to consider the higher level of evidence that RCTs provide compared with RWE when making a regulatory decision.29 We should aim to use the highest standard possible while acknowledging it is not feasible for RCTs to answer all clinical questions related to drug effectiveness. Reasons an RCT might not be feasible include (i) prohibitive cost,30, 31 (ii) situations where the standard of care is effective and/or administering a placebo is unethical,32 and (iii) rare diseases where patient recruitment is challenging.33, 34, 35 In addition, RCTs are typically performed in a relatively homogenous cohort that is less diverse than the real‐world population in terms of age, race, socioeconomic status, geography, clinical setting, disease severity, patient history, and patient willingness to seek treatment.36, 37, 38, 39, 40 Finally, over time, the use of therapeutics often expands to indications and population groups they were not originally tested in. Pragmatic and other modern trial designs may mitigate some of these challenges, for example, by improving generalizability, but fundamental issues of cost, time, and difficult recruitment remain.41 Pharmaceuticals have been approved without RCTs, but such approvals should be limited to cases where the potential burden of an incorrect treatment estimation is outweighed by the burden of conducting an RCT.42 It is critical to utilize alternatives to supplement but not supplant RCTs, both in the form of pragmatic trials and RWE‐based analyses.

The FDA has taken action in an attempt to reduce the burden in both time and cost of bringing a new therapy to market, through the accelerated regulatory decision regulations initially put in place in 1992 and expanded in 2012.43 These regulations allow for surrogate and intermediate end points when a therapy addresses a serious condition without existing options. In the 5 years following final guidance from the FDA in May 2014, 71 therapies were approved through the accelerated pathway.44 This is in comparison to 25 in the 5 years prior to this guidance. In addition, in 2012, the FDA established the “breakthrough therapy designation” for therapies intended to treat serious or life‐threatening conditions where the therapy may demonstrate substantial improvement.45 From April 2015 to March 2019, 24 drugs received breakthrough therapy designation. Because clinical end points (i.e., outcomes that show direct clinical benefits, such as increased overall survival) may take a long time to develop, many drug trials with breakthrough therapy designation use surrogate end points, which are measurements or signs predictive of clinical outcomes but do not directly measure clinical benefits.46, 47 Examples of surrogate end points include blood pressure for hypertension drugs and serum low‐density lipoprotein cholesterol for hypercholesterolemia treatments.47 Shorter trials and the use of surrogate end points present a strong need for postapproval surveillance for both safety and effectiveness, especially in the context of traditional clinical end points. The use of shorter trials and surrogate end points to accelerate regulatory decisions may suggest that the traditional process is unnecessarily slow and wasteful. However, it is also possible that the extensive use of these shortcuts will lead to suboptimal decisions because there is no guarantee that improvement as measured by surrogate end points will translate to traditional end points.

In this light, we ask the question: “What is the role of RWE in the regulatory process?” In this review, we first lay out some of the primary reasons RWE is not suited to replace RCTs. We then examine some areas where RWE is well suited to supplement and enhance the regulatory process and to provide postapproval guidance.

Limitations for RWE for Regulatory Decision Making

Here, we examine the limitations of commonly used methods for applying RWD to inform regulatory decision making. We focus on the limitations of RWE to be used in the comparative effectiveness analyses conducted in phase II and phase III RCTs. We, therefore, narrowly examine three types of studies that have been proposed as ways to perform these comparative effectiveness analyses of therapies from RWD: (i) Virtual Comparative Effectiveness Studies ascertain outcomes in both an intervention and control group from an RWD source.48, 49 (ii) Studies using Historical Control Arms compare retrospective RWD‐derived controls against an uncontrolled treatment arm.50 (iii) Studies using Synthetic (Real‐World) Control Arms pair an uncontrolled treatment arm with concurrent RWD controls.22

Each of the three RWE study designs has significant issues that prevent it from achieving a level of evidence on par with RCTs. Because of the challenges in drawing causal conclusions about treatment efficacy from RWE, these designs are not suited to replace RCTs. Table 1 shows how the specific limitations discussed apply to each form of study design. Most of these mechanisms to use RWE are affected by multiple limitations. Below, we describe these concerns and then link them to each of these three forms of evidence.

A. Unobserved confounders

Of the sources of RWD, the EHR is generally considered to provide the most granular view of patient care, although insurance claims may provide a higher level of completeness of care. Neither of these data sources is designed for secondary analysis: the EHR is primarily intended for patient care, whereas claims data are designed for financial billing and reimbursement. As a result, there are potentially unobserved factors that systematically influence a physician’s decision to pursue a particular course of treatment, preventing the direct comparison of outcomes between treatment arms or direct comparisons to RCT findings. Traditionally, these factors are addressed using randomization because random treatment assignment does not allow for systematic differences between exposed and nonexposed arms. This is not possible using observational data. Several examples of these factors include:

- Physician opinion. A physician may have just read a paper or attended a seminar recommending a particular treatment, may have seen the treatment work well for a patient that they deem similar, or they may simply have a gut feeling that a treatment is right for a patient. Pessimistically, pharmaceutical companies may exert influence regarding the choice of treatment.51, 52 This influence can often be associated with patient characteristics.

- Patient request. A patient, through his or her own research or through advertisement, may request a specific course of treatment.53, 54 This bias is particularly evident in cases when the patient is attempting to optimize a different outcome from the trial (e.g., shared decision making).55

- Knowledge of a trial. Patients who choose to enroll in a trial are likely to be distributed differently than the general disease population. Similarly, clinicians participating in a trial or prescribing an off‐label drug are likely to make different choices regarding patient care. In particular, there is some evidence that physicians are more likely to attempt experimental treatment in those who appear healthier.37, 40 For this reason, unobserved confounders can affect all three considered uses of RWE.

- Differential access to treatment. The availability or ease of offering a particular treatment may be dependent on administrative, logistical, or insurance coverage‐based barriers.56 These differences are magnified by the integration of multisite and geographically diverse data into observational studies. Consistent annotation or quantification of these factors is not a central theme within RWD datasets, nor are the magnitudes and directions of these effects on physician behavior completely understood.
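The mechanism behind these examples can be illustrated with a small simulation. The sketch below is illustrative only and is not taken from the article: the "apparent health" variable, its effect sizes, and the function name `simulate_naive_vs_randomized` are all assumptions chosen for the example. It shows how a factor that influences both treatment choice and outcome, but is never recorded, inflates a naive observational contrast while a randomized contrast stays close to the true effect.

```python
import math
import random
from statistics import fmean


def simulate_naive_vs_randomized(n=20000, true_effect=1.0, seed=0):
    """Compare a naive observational contrast with a randomized one.

    Hypothetical data-generating process: an unrecorded "apparent health"
    score raises both the chance of treatment and the outcome itself.
    """
    rng = random.Random(seed)
    obs = {True: [], False: []}  # outcomes by treatment, physician-assigned
    rct = {True: [], False: []}  # outcomes by treatment, coin-flip-assigned
    for _ in range(n):
        health = rng.gauss(0.0, 1.0)  # unobserved confounder (assumed)
        noise = rng.gauss(0.0, 1.0)
        # Observational setting: healthier-looking patients are treated more often.
        p_treat = 1.0 / (1.0 + math.exp(-2.0 * health))
        t_obs = rng.random() < p_treat
        # Randomized setting: assignment ignores health entirely.
        t_rct = rng.random() < 0.5
        obs[t_obs].append(2.0 * health + true_effect * t_obs + noise)
        rct[t_rct].append(2.0 * health + true_effect * t_rct + noise)
    naive = fmean(obs[True]) - fmean(obs[False])
    randomized = fmean(rct[True]) - fmean(rct[False])
    return naive, randomized


if __name__ == "__main__":
    naive, randomized = simulate_naive_vs_randomized()
    print(f"naive observational difference: {naive:.2f}")
    print(f"randomized difference:          {randomized:.2f}")
```

Under these assumed parameters the naive difference is substantially larger than the true effect of 1.0, because treated patients were healthier to begin with; adjusting for recorded covariates cannot remove this bias when the confounder is absent from the data.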

B. Medicine changes over time

Historical control arms are by nature historical, and medicine changes as new treatments and technologies, new guidelines, and environmental or socioeconomic changes are introduced. This was demonstrated by Sacks et al.50 in 1982 when they showed that 79% (44 of 56) of historical control trials found the treatment of interest better than the control, but only 20% of RCTs agreed. For six different clinical areas, they found the results of the trials were more dependent on the method of selecting control groups than on the therapy being considered. In a similar vein, Zia et al.57 identified 43 phase III clinical trials that used identical therapeutic regimens to their corresponding phase II study. Only 28% of the phase III studies were “positive” and 81% had lower effect sizes than their corresponding phase II study. The effect of time trends in medicine is evident even over the course of a single outcome‐adaptive trial.58, 59 Outcome‐adaptive trials work by adjusting treatment assignment probabilities based on which treatment arm is doing better in order to subject as many participants as possible to the most promising treatment. When a treatment arm seems promising at the beginning of a trial, patients are disproportionately enrolled in the promising arm. This means that the average date of enrollment can be much later in some arms than others.
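A minimal sketch of why a secular trend alone can make an ineffective therapy look beneficial against a historical control is given below. The yearly improvement, the gap in years, and the function name `historical_vs_concurrent` are hypothetical values chosen for illustration, not figures from the studies cited above.

```python
import random
from statistics import fmean


def historical_vs_concurrent(n=5000, yearly_improvement=0.1, years_gap=5, seed=2):
    """Simulate a therapy with NO true effect under a background time trend.

    Assumption: standard care improves outcomes by `yearly_improvement`
    per year, so patients enrolled `years_gap` years later do better
    regardless of which therapy they receive.
    """
    rng = random.Random(seed)
    drift = yearly_improvement * years_gap
    historical = [rng.gauss(0.0, 1.0) for _ in range(n)]    # older controls
    concurrent = [rng.gauss(drift, 1.0) for _ in range(n)]  # same-era controls
    treated = [rng.gauss(drift, 1.0) for _ in range(n)]     # zero treatment effect
    vs_historical = fmean(treated) - fmean(historical)
    vs_concurrent = fmean(treated) - fmean(concurrent)
    return vs_historical, vs_concurrent


if __name__ == "__main__":
    vs_hist, vs_conc = historical_vs_concurrent()
    print(f"apparent effect vs. historical controls: {vs_hist:.2f}")
    print(f"apparent effect vs. concurrent controls: {vs_conc:.2f}")
```

In this toy setting the comparison against historical controls recovers roughly the accumulated drift rather than any property of the therapy, while the concurrent comparison is close to zero.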

C. Trials may change participant and provider behavior—the “Hawthorne Effect”

Clinical trial protocols may result in different behavior than typical clinical practice. Although difficult to measure, there is weak evidence of a protocol or “Hawthorne effect” leading to participants of clinical trials having better outcomes than typical clinical practice.60 McCarney et al.61 performed a placebo‐based randomized trial in dementia, which found that participants receiving more frequent follow‐up visits achieved better cognitive and carer‐rated quality‐of‐life outcomes. Similarly, behavioral changes, such as the more frequent follow‐up of clinical trial participants, may result in better medication adherence than real‐world settings.62 In traditional RCTs, both arms of the trial may experience the Hawthorne effect. When using historic or synthetic controls, only the traditional intervention arm would experience the Hawthorne effect.

D. Closer monitoring of adverse effects in trials

Because trials combine more frequent follow‐up with specific attention to adverse drug events, recorded rates of adverse drug events may be lower in routine clinical practice than in clinical trials. In addition, relatively minor adverse effects may not be billed against or recorded in the context of more serious diagnoses (e.g., nausea on a chemotherapy protocol). Several studies have shown that harm is underreported even in oncology RCTs and that reporting may not follow protocols.63 RWE may therefore underestimate important patient safety concerns.

E. Lack of pretrial registration and multiple testing

It is critical that clinical trials be registered before any analysis of results occurs to prevent unreported multiple testing.64, 65 Many statistical methods have been proposed to minimize false discovery in studies involving testing multiple hypotheses simultaneously, and the need for correction is well recognized in biomedical research. However, some forms of multiple testing are more difficult to identify. As an illustration, there are several widely distributed datasets (e.g., Truven MarketScan, Optum Claims data, etc.), and it is likely that multiple investigators will ask similar questions using these datasets. The multiple testing nature of large and uncoordinated efforts may not be apparent to the individual investigators involved or to the scientific community, and the likelihood of false positives would remain uncorrected.66 To highlight this issue, Silberzahn et al.67 distributed the same dataset to 29 teams with a total of 61 data analysts and asked the question, “Are soccer referees more likely to give red cards to dark‐skin‐toned players than light‐skin‐toned players?” Twenty of the 29 teams found a positive effect, the other 9 found no significant relationship, and the estimated effect sizes ranged from 0.89 to 2.93. This study makes explicit a phenomenon that is largely hidden from view: multiple chances and analytical approaches to explore an observational hypothesis may result in different point estimates. Given the financial stakes in regulatory outcomes, there are strong incentives for reporting positive results. Because exploratory analyses may be performed prior to registration or in the absence of registration, multiple hypothesis testing may plague RWE efforts. Preregistration of methods in RCT studies prevents this type of manipulation, both deliberate and inadvertent, and provides a measure of methodological transparency.
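The scale of the uncorrected multiple testing problem can be sketched with the standard family‐wise error calculation. The example assumes that the analyses are independent and that no true effect exists, which is a simplification (analyses of a shared dataset are correlated); the function names are illustrative.

```python
def prob_any_false_positive(k, alpha=0.05):
    """Chance that at least one of k independent null tests is 'significant'.

    Assumes independence between tests and no true effect, so each test
    has probability alpha of a false positive.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    return 1.0 - (1.0 - alpha) ** k


def bonferroni_alpha(k, alpha=0.05):
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return alpha / k


if __name__ == "__main__":
    for k in (1, 5, 20):
        print(k, round(prob_any_false_positive(k), 3), bonferroni_alpha(k))
```

With 20 uncoordinated analyses at a 0.05 threshold, the chance of at least one spurious finding under these assumptions is about 0.64. A correction such as Bonferroni requires knowing `k`, which is exactly what is unavailable when independent groups query the same dataset without registration.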

F. Weaknesses of propensity score methods

Propensity score matching and adjustment are popular approaches to account for high‐dimensional confounders in observational studies.68 In propensity score matching, researchers construct a matched population of treated and nontreated individuals based on their probabilities of receiving the treatment. By pairing every treated patient with one or more nontreated patients that were roughly equally likely to have received the treatment, propensity score matching seeks to balance the underlying factors associated with treatment assignment. In propensity score adjustment, the high‐dimensional confounders are summarized by a propensity score, which can then be adjusted in downstream analyses.68, 69

In practice, propensity score methods are difficult to properly execute and evaluate.70 For instance, it is very difficult to determine if a given propensity score model has been correctly specified. The traditional method for evaluating predictive performance fails to properly evaluate the quality of a propensity score model because unmeasured confounding and the randomness in treatment assignment both contribute to the deviation of the model from the observed data. As such, it can be difficult for a reader to determine whether the model has sufficiently adjusted for confounding.70

The deployment of the propensity score is also not straightforward. Because propensity scores from two patients are rarely exactly equal, defining “close enough” propensities for two patients to count as a match involves a delicate balance between excessively loose cutoffs (which risks undercutting the notion of matching itself) and highly stringent cutoffs (which may exclude too many patients from the analysis). The process of propensity matching almost inevitably changes the population being studied in ways difficult to interpret by systematically excluding some subset of patients that fail to achieve a proper match in the other “arm” of the analysis. Ultimately, this means that propensity score matching may provide causal estimates about the effect of the intervention on a different population than the study originally sought to investigate.
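The caliper trade‐off described above can be made concrete with a small sketch. This is one simple variant (greedy 1:1 nearest‐neighbor matching without replacement), not the procedure of any particular study; the patient identifiers and propensity scores are made up, and in practice the scores would come from a fitted model.

```python
def caliper_match(treated, controls, caliper):
    """Greedy 1:1 nearest-neighbor matching on the propensity score.

    treated, controls: dicts mapping a patient id to an (already estimated)
    propensity score. Each control is used at most once. A treated patient
    whose nearest remaining control is farther than `caliper` is dropped.
    """
    available = dict(controls)
    pairs, unmatched = [], []
    for tid, score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            unmatched.append(tid)
            continue
        cid = min(available, key=lambda c: abs(available[c] - score))
        if abs(available[cid] - score) <= caliper:
            pairs.append((tid, cid))
            del available[cid]  # matching without replacement
        else:
            unmatched.append(tid)
    return pairs, unmatched


# Hypothetical scores for illustration only.
treated = {"t1": 0.30, "t2": 0.52, "t3": 0.90}
controls = {"c1": 0.28, "c2": 0.50, "c3": 0.60, "c4": 0.10}

strict_pairs, strict_dropped = caliper_match(treated, controls, caliper=0.05)
loose_pairs, loose_dropped = caliper_match(treated, controls, caliper=0.50)
print("strict:", strict_pairs, "dropped:", strict_dropped)
print("loose: ", loose_pairs, "dropped:", loose_dropped)
```

With the strict caliper, the treated patient with the highest propensity (`t3`) has no comparable control and is excluded, so the estimate no longer describes patients like `t3`. With the loose caliper, `t3` is retained but paired with a control whose score differs by 0.30, which undercuts the premise that matched patients were about equally likely to be treated.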

G. Inability to compare RWD preapproval of the experimental treatment

The efficacy of a treatment cannot be evaluated from RWD until it is used widely enough for there to be a substantial body of data. In general, this means that treatment needs to already be approved for the indication of interest. In rare cases, there may be significant off‐label usage, but the reasoning behind this off‐label usage should be carefully considered in terms of its impacts on patient selection.71, 72 Significant differences between the patients receiving the off‐label treatment vs. the existing standard of care could exist, resulting in issues of generalizability. The inability to compare observations preapproval to observations in the general population is especially pertinent to the experimental arm, but there may also be subtle changes in the standard of care that can be difficult to identify from RWD (e.g., dosing, timing, and adherence).73, 74 Finally, off‐label prescription with the hope of generating RWD is an inefficient mechanism of hypothesis testing, which is often optimized by formal trials.

H. Opportunity for conflict of interest

Therapy approval decisions are binary outcomes with the potential for profound impact on patients, employees, shareholders, and other stakeholders. Because of this, there is an outsized incentive for a variety of parties to cheat the system, regardless of the study design. It was recently revealed that there was “data manipulation” in the data provided to the FDA during the approval process for Zolgensma.75, 76 In RWE, this poses a potentially bigger issue given the retrospective nature of the data. The bar for exploitation is lower than in prospective studies, and exploitation becomes less black and white. It is not possible to ensure that trial organizers have not already analyzed the data to ensure that the control arm is penalized. This could be done through manipulation of the inclusion and exclusion criteria, the method of propensity matching between the trial subjects and the subjects derived from RWD, or other intentional selections. For example, Sacks et al.50 found that historical control groups generally did significantly worse than RCT control groups across 50 reported clinical trials. The retrospective population selection task presents an opportunity for exploitation that is difficult to uncover, especially when considering potential financial implications. Although there is the opportunity for bad actors to cheat the system regardless of study type, RWE‐based studies can be performed by a smaller set of investigators, with less exposure and transparency of the protocol.

I. Completeness of data and loss of follow‐up

Of the three most commonly used sources of RWD, EHRs and hospital‐based administrative data both suffer from the fact that they do not record any care received outside of a particular hospital system and often contain incomplete records even within a particular EHR (e.g., inpatient vs. ambulatory). This leads to challenges in ensuring completeness of care in RWD‐based studies using EHR data. In addition, over 50% of the US population receives health insurance through their employment.77 The average tenure of employment is just 4.3 years for men and 4 years for women.78 This short tenure means even insurance claims datasets present challenges when considering the completeness of care and follow‐up coverage. Record incompleteness, defined as instances when fewer than 50% of enrollees have at least one claim in a given year, can be caused by administrative phenomena, such as company or record mergers, as well as subcontracting of services.79
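The incompleteness definition above (fewer than 50% of enrollees with at least one claim in a given year) translates directly into a screening check. The sketch below assumes a hypothetical input layout, a mapping from year to per‐enrollee claim counts; real claims extracts would need to be aggregated into this shape first.

```python
def share_with_claims(claims_per_enrollee):
    """Fraction of enrollees with at least one claim in the year."""
    if not claims_per_enrollee:
        raise ValueError("no enrollees in this year")
    with_claim = sum(1 for n in claims_per_enrollee.values() if n >= 1)
    return with_claim / len(claims_per_enrollee)


def incomplete_years(claims_by_year, threshold=0.5):
    """Years in which the share of enrollees with any claim is below threshold."""
    return [
        year
        for year, counts in sorted(claims_by_year.items())
        if share_with_claims(counts) < threshold
    ]


# Made-up enrollee ids and claim counts for illustration only.
claims_by_year = {
    2015: {"a": 3, "b": 0, "c": 1, "d": 2},  # 3 of 4 enrollees have a claim
    2016: {"a": 0, "b": 0, "c": 1, "d": 0},  # 1 of 4 enrollees has a claim
}
print(incomplete_years(claims_by_year))
```

Flagged years are candidates for exclusion or sensitivity analysis, since an apparent absence of events in such a year may reflect missing records rather than absent care.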

J. Measurement error in identifying patient status from RWD

EHRs and administrative data were not initially intended for specific studies; thus, these records may not be sufficiently granular to ascertain the phenotypic status of the patients. Researchers need to make additional assumptions or resort to proxy measures to infer the disease status of the patients under study. Additionally, previous studies showed that different hospitals have dissimilar approaches to disease and procedure coding, even when using the same standard lexicon of diagnostic and procedure codes. Data harmonization efforts and calibration studies are necessary to enhance the accuracy of inferring patient statuses using the limited, and sometimes inconsistent, descriptors in the RWD.

The Way Forward: How Can We Capture Value From RWE to Effectively Improve Patient Treatment?

Although RWD analyses are susceptible to the biases and issues summarized above, they hold promise in complementing trial analyses and enable the evaluation of numerous biological hypotheses at a minimal cost. Below, we discuss the approaches that could maximize the value and potential of RWE with particular attention to the approval and postapproval surveillance processes (Table 2).

Integrate results from multiple designs of observational studies to triangulate effect estimates

As discussed in the previous section, RWE generated from different study designs suffers from different sources of biases, and causal inference based on RWD relies on strong assumptions rarely met in practice. Nonetheless, by combining the results generated by different study designs, we can better estimate the risks and benefits of treatment strategies.80 For example, cohort studies using RWD suffer from unmeasured confounding, whereas the use of instrumental variables relies on instrumental assumptions.81 Because these two study designs require different sets of assumptions, we can estimate the extent of assumption violation and its impact on the risk estimates. Similarly, we can further incorporate the results from natural experiments82 and negative control analyses83 to gauge the effects of treatments and unmeasured confounders. By comparing the results from different approaches, we can determine the possible range of the true estimates with a greater level of confidence. Nonetheless, given the fact that different study designs and analytical methods possess distinct pros and cons, researchers need to be vigilant about the interpretation of their combined results.80 Effect triangulation approaches have successfully estimated causal effects in settings with strong confounding, such as the effects of lowering systolic blood pressure on the risk of coronary heart disease.80, 84

Leverage new statistical approaches for causal analyses

Philosophers have attempted to understand causality since the Age of Enlightenment,85 and epidemiologists proposed several criteria to evaluate the linkage between causes and effects.86 Recent developments of statistical methods allow causal inference from observational data while minimizing biases insurmountable by conventional approaches.70 As an illustration, in the presence of time‐varying confounders, traditional variable adjustment approaches will inevitably result in biases, because subsequent measurements after the baseline are likely affected by the treatments. The g‐methods, a group of statistical approaches, can account for the time‐varying confounders and treatment‐confounder feedback that commonly reside in observational data.87, 88, 89 In addition, recently developed multiply robust statistical approaches can reduce the risk of model misspecification by relaxing the assumptions needed to achieve unbiased estimates.90, 91 For example, doubly robust methods can consistently estimate the effects of treatments if either the confounder‐treatment relation or the confounder/treatment‐outcome relation is correctly modeled,90 which would be helpful in settings where model misspecification raises significant concern. It is worth noting that the causal identification conditions (i.e., exchangeability, positivity, and consistency) still need to hold in order to get accurate effect estimates. In high‐dimensional settings, machine‐learning approaches can model the high‐level interactions among the variables and facilitate dimension reduction.92 These methods can accommodate the large number of variables extracted from EHRs and other RWD sources.
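The doubly robust property can be illustrated with the augmented inverse probability weighting (AIPW) estimator, one common doubly robust estimator chosen here for the example rather than taken from the cited references. The sketch uses a single measured binary confounder, a simulated true effect of 1.0, and simple stratum‐mean models; a "misspecified" model is one that ignores the confounder. All names and parameter values are assumptions.

```python
import random
from statistics import fmean


def simulate(n=40000, seed=1):
    """Rows of (x, t, y): binary confounder, treatment, outcome; true effect 1.0."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = 1 if rng.random() < 0.5 else 0
        t = 1 if rng.random() < (0.8 if x else 0.2) else 0
        y = 1.0 * t + 2.0 * x + rng.gauss(0.0, 1.0)
        rows.append((x, t, y))
    return rows


def fit_models(rows, use_x):
    """Stratum-mean propensity and outcome models; use_x=False ignores x."""
    key = (lambda x: x) if use_x else (lambda x: 0)
    groups = {}
    for x, t, y in rows:
        groups.setdefault(key(x), []).append((t, y))
    e, m1, m0 = {}, {}, {}
    for k, g in groups.items():
        e[k] = fmean(t for t, _ in g)                 # P(T=1 | stratum)
        m1[k] = fmean(y for t, y in g if t == 1)      # E[Y | T=1, stratum]
        m0[k] = fmean(y for t, y in g if t == 0)      # E[Y | T=0, stratum]
    return (lambda x: e[key(x)]), (lambda x: m1[key(x)]), (lambda x: m0[key(x)])


def aipw(rows, e, m1, m0):
    """AIPW estimate of the average treatment effect."""
    return fmean(
        m1(x) - m0(x)
        + t * (y - m1(x)) / e(x)
        - (1 - t) * (y - m0(x)) / (1.0 - e(x))
        for x, t, y in rows
    )


rows = simulate()
e_ok, m1_ok, m0_ok = fit_models(rows, use_x=True)       # correctly specified
e_bad, m1_bad, m0_bad = fit_models(rows, use_x=False)   # confounder ignored

outcome_model_ok = aipw(rows, e_bad, m1_ok, m0_ok)
propensity_model_ok = aipw(rows, e_ok, m1_bad, m0_bad)
both_wrong = aipw(rows, e_bad, m1_bad, m0_bad)
print(outcome_model_ok, propensity_model_ok, both_wrong)
```

When either the outcome model or the propensity model uses the confounder, the estimate lands near the simulated effect of 1.0; when both ignore it, the estimate reverts to the confounded naive contrast. The sketch assumes the confounder is measured, so it says nothing about unobserved confounding, where the identification conditions noted above fail.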

Establish a robust infrastructure for randomized registry trials

Integration of EHRs into a large‐scale data registry would allow for real‐time matching against clinical trials and for physicians to be immediately notified if a patient was a potential fit.93 After patients were enrolled in the trial, the treatment could be randomized and follow‐up could occur at their normal point of care.94 This would have the potential to massively reduce enrollment and follow‐up costs while increasing the diversity of populations included in clinical trials. This cost reduction could enable randomized trials to answer a wider scope of questions. In particular, it may allow randomized registry trials to be performed postapproval for comparative effectiveness analysis and additional trials sponsored by government and nonprofit organizations.

Perform postapproval surveillance and validate that efficacy translates to effectiveness

Although clinical trials measure drug efficacy, it is important to perform postapproval surveillance to determine whether efficacy is generalizable to a broader population. Postapproval surveillance would allow drug pricing to take into account the real‐world value delivered. In addition, due to size restrictions, clinical trials may not capture rare adverse effects95 or drug–drug interactions96 that could be discovered through RWD analysis.

Use RWD to measure how closely trial populations resemble real‐world populations

Measuring how closely trial populations resemble real‐world populations would enable trial organizers to plan representative trials and regulators to determine where additional trials may be necessary. In addition, if there is truly a heterogeneity of effects, it is unlikely to be detected in a homogeneous trial population. It is, therefore, important to monitor both effectiveness and safety in the larger heterogeneous population that receives the therapy.
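One simple way to quantify how far a trial population departs from a real‐world population is the standardized mean difference (SMD) of a baseline covariate. The sketch below is an illustration under stated assumptions: the SMD is a metric chosen for this example rather than one prescribed here, the function name is hypothetical, and the ages are made‐up values. An absolute SMD above roughly 0.1 is an often‐cited rule of thumb for meaningful imbalance, although the appropriate threshold depends on the context.

```python
import numpy as np


def standardized_mean_difference(trial, real_world):
    """Difference in means divided by the pooled standard deviation."""
    trial = np.asarray(trial, dtype=float)
    real_world = np.asarray(real_world, dtype=float)
    pooled_sd = np.sqrt((trial.var(ddof=1) + real_world.var(ddof=1)) / 2.0)
    return float((trial.mean() - real_world.mean()) / pooled_sd)


# Hypothetical ages (years), for illustration only.
trial_age = [50, 60, 70]
real_world_age = [60, 70, 80]

smd_age = standardized_mean_difference(trial_age, real_world_age)
print(f"SMD for age: {smd_age:.2f}")  # negative: trial patients are younger
```

Repeating the calculation for each covariate available in the RWD source (for example, age, comorbidity scores, and concomitant medications) would give a profile of where the trial population is least representative.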

Preregistration of RWD analyses

Establishing a preregistration requirement for RWD analyses is an effective approach to reduce the risk of multiple hypothesis testing and p‐hacking.97, 98 However, many observational datasets, such as Medicare,99 Medicaid,100 and Marketscan,101 are available and fully accessible to the researchers before the conception of specific RWD studies, which makes adequate preregistration a significant challenge. Combining a preregistration mechanism with a requirement to validate the identified effects on prospective patient cohorts could mitigate the risk of false discovery due to p‐hacking.97
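The scale of the multiple testing problem can be made concrete with a short calculation. The sketch below assumes independent tests in which every null hypothesis is true, which is a simplification, and computes the probability of at least one false‐positive finding along with the Bonferroni‐corrected per‐test threshold. The function names are hypothetical, and Bonferroni is only one of several available corrections.

```python
def family_wise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test significance threshold that keeps the family-wise rate <= alpha."""
    return alpha / n_tests


for n_tests in (1, 5, 20, 100):
    fwer = family_wise_error_rate(n_tests)
    threshold = bonferroni_threshold(n_tests)
    print(f"{n_tests:>3} tests: FWER = {fwer:.3f}, Bonferroni threshold = {threshold:.4f}")
```

With 20 unreported analyses of the same dataset at a 0.05 threshold, the chance of at least one spurious "significant" result is about 64% under these assumptions, which is why preregistration is difficult to enforce when the number of analyses already performed is unknown.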

Complement RCTs and pragmatic trials with RWD

Although RCTs and pragmatic trials generate high‐quality evidence for establishing causality24, 102 and inform real‐world practice,41, 103 respectively, it is infeasible to answer all clinically important research questions by setting up a series of trials. In addition to using multiple sources of RWE to arrive at better risk estimates (“Integrate Results From Multiple Designs of Observational Studies to Triangulate Effect Estimates” section), researchers could further leverage the hypotheses generated by RWD to inform trial design, or when the subgroup analyses of trials are underpowered, conduct RWD studies to further identify the participants who would likely benefit from the treatments under study.104

Establish reporting guidelines of RWE

Many reporting guidelines have been established for observational studies, but the specific requirements for using RWE for regulatory approval remain unclear. Widely accepted guidelines for academic publication include the REporting of studies Conducted using Observational Routinely collected health Data (RECORD)105 and STrengthening the Reporting of OBservational studies in Epidemiology (STROBE) guidelines.106 However, researchers have called for more stringent and specific approaches for subfields of epidemiology, such as the RECORD‐PE statement for pharmacoepidemiological research.107 Similar efforts are needed to enhance the validity and reproducibility of RWE for regulatory purposes. In particular, regulatory agencies need to set specific requirements for the study participants, variables, measurement methods, data curation procedures, analytical approaches, and accessibility of the data and codes used in demonstrating the effectiveness of treatments using RWD.

Establish a structure for the postapproval evaluation of clinical practice guidelines and predictive algorithms

Historically, postapproval marketing of drugs involved a wide network of pharmaceutical sales representatives attempting to visit physicians to provide samples and detailing.51, 108 In the 21st century, there has been an increased interest in establishing standardized clinical practice guidelines, for example, Choosing Wisely,109 and in providing predictive drug recommendations as a part of personalized110 and precision medicine.111 Standardizing care is a core component of improving the process with which care is delivered in the structure, process, and outcome framework for evaluating healthcare quality.112 Personalized drug recommendations offer the promise to provide patients with the drugs they are most likely to benefit from. This transition from individualized decision making presents a great potential to improve care and to deliver evidence‐based medicine. However, it also presents the potential for postapproval marketing to shift from one‐on‐one encounters to influence at the system level through guidelines and algorithms. It is critical for RWE‐based guidelines and recommendation systems to be thoroughly evaluated by independent third parties in the form of regulatory agencies and physician societies.

Determine value‐based reimbursement of drugs

Value‐based drug pricing has been discussed for over a decade.113, 114, 115 RWD analyses can reveal the actual effectiveness of the drugs in real‐world use cases, and, hence, inform the value created for the patients.116 Tracking the longitudinal health outcomes of patients receiving the treatments under the usual circumstances of healthcare practice is crucial for determining the true value of therapies. If value‐based pricing is to succeed, how value is attributed must be carefully regulated and monitored.

Conclusions

RWE presents a unique opportunity to accelerate the development of new therapies and to evaluate both the efficacy and the effectiveness of these treatments. However, researchers and regulators should take heed of the limitations of RWE and the potential biases lurking in RWD. Although there is significant value in utilizing RWE preregulatory approval (e.g., identifying subpopulations of need) and postregulatory approval (e.g., safety and surveillance), there are significant barriers to reliably using observational data as a key component of the regulatory process. Conflicting studies on attempts to replicate clinical trials using RWE show the potential risks and brittleness of RWE‐based comparative effectiveness.24, 25 This view is further supported by the discrepancies between trial results and those from RWD,117, 118, 119, 120 including a recent failed attempt at Facebook121 to replicate RCTs with large‐scale RWD analyses in a nonmedical context.

The appropriate use of RWE must be driven by forward‐thinking best practices, guidelines, and regulations to avoid spurious or biased findings. Increased data availability presents many opportunities but also brings with it the potential for biases in a system with outsized financial incentives. Traditional approaches, including preregistration, may not be sufficient when it is not possible to know which analyses have already been performed. It is critical to acknowledge the history and strengths of traditional RCTs especially in regard to initial approvals. RCTs should not be replaced for approval but can be supplemented to better understand treatment effectiveness in the real world. Prospective follow‐up studies driven by unconflicted parties will be critical to decision making around postapproval therapy surveillance and reimbursement. The appropriate use of RWE offers promise to accelerate the development of therapies while making their delivery safer, more targeted, and more efficient in real‐world settings.

Funding

This study was funded by the National Library of Medicine (NLM) T15LM007092 (B.K.B.‐J.), Harvard Data Science Fellowship (K.‐H.Y.), and National Institute of General Medical Sciences (NIGMS) T32GM007753 (S.G.F.).

Conflict of Interest

All other authors declared no competing interests for this work.

Disclosures

Dr. Prasad reports receiving royalties from his book Ending Medical Reversal; that his work is funded by the Laura and John Arnold Foundation; that he has received honoraria for grand rounds/lectures from several universities, medical centers, and professional societies, and payments for contributions to Medscape; and that he is not compensated for his work at the Veterans Affairs Medical Center in Portland, Oregon, or the Health Technology Assessment Subcommittee of the Oregon Health Authority. Dr. Beaulieu‐Jones reports owning equity in Progknowse Inc. outside of the submitted work. Progknowse is a company working with academic and community‐based health systems to integrate clinical data and enhance data science capabilities.

Contributor Information

Brett K. Beaulieu‐Jones, Email: brett_beaulieu-jones@hms.harvard.edu

Kun‐Hsing Yu, Email: Kun-Hsing_Yu@hms.harvard.edu

References

1. Jarow J.P., LaVange L. & Woodcock J. Multidimensional evidence generation and FDA regulatory decision making: defining and using ‘real‐world’ data. JAMA 318, 703–704 (2017).

2. Corrigan‐Curay J., Sacks L. & Woodcock J. Real‐world evidence and real‐world data for evaluating drug safety and effectiveness. JAMA 320, 867 (2018).

3. US Food and Drug Administration. Real‐world evidence <https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence> (2019).

4. Cave A., Kurz X. & Arlett P. Real‐world data for regulatory decision making: challenges and possible solutions for Europe. Clin. Pharmacol. Ther. 106, 36–39 (2019).

5. Sherman R.E. et al. Real‐world evidence – what is it and what can it tell us? N. Engl. J. Med. 375, 2293–2297 (2016).

6. Final report on the adaptive pathways pilot <https://www.ema.europa.eu/en/documents/report/final-report-adaptive-pathways-pilot_en.pdf>

7. Herbst A.L., Ulfelder H. & Poskanzer D.C. Adenocarcinoma of the vagina. Association of maternal stilbestrol therapy with tumor appearance in young women. N. Engl. J. Med. 284, 878–881 (1971).

8. Cooper W.O. et al. Major congenital malformations after first‐trimester exposure to ACE inhibitors. N. Engl. J. Med. 354, 2443–2451 (2006).

And the list goes on: https://europepmc.org/article/MED/16760444
